Whether on the factory floor, in a medical device, or in an aircraft, real-time critical systems demand quick decision-making processes. They deploy closed-loop control, allowing only a tight time window to gather data, process that data, and update the system. Proving that execution times never exceed their allotted window is always a challenge for developers, and doubly so when using multicore processors.

But there’s more to it than that. Multicore processors (MCPs) impact the whole development lifecycle. Parts of the development process become iterative, heightening the importance of requirements traceability. Removing possible causes of execution time variability and unpredictability becomes paramount, which makes correct data coupling and control coupling (DCCC) analysis and state-of-the-art coding standards checking even more important. Efficient instrumentation techniques that minimise the impact on executing software become even more significant, because instrumentation has the potential to affect several processes concurrently. And so on…

That is why a holistic focus on the implications of MCPs throughout the development life cycle will always yield a better outcome than disproportionately heightening the significance of timing analysis and losing sight of the bigger picture.

Hard real-time systems need to satisfy stringent timing constraints which are derived from the systems they control. In general, upper bounds on the execution times are needed to show the satisfaction of these constraints. The primary challenge in leveraging the benefits of multicore processors in such circumstances is in guaranteeing sufficient time in all cases so that these upper bounds are never exceeded. Without that assurance, there is no guarantee that the software will always behave as expected – that is, it may not be deterministic.

Clearly that throws timing analysis into the spotlight, and of course it is an important consideration. But it’s not the only one. For example, the need for consistent execution times places new emphasis on particular aspects of code quality, while the iterative nature of interference analysis brings new challenges for project managers.

The use of MCPs impacts much of the development cycle. In some phases the impact is limited to subtle changes; in others, there are new ways of working to consider.

Static analysis contributes in two important ways.

Worst-Case Execution Time is generally easier to cope with if, for a given path through the code, it varies as little as possible. Even if the source code is optimal, the variation can be considerable if MCPs are involved – so the last thing you need is suboptimal code that contributes to that variation. The diligent application of coding standards (such as MISRA C/C++, JSF++ AV, and CERT C/C++) is good practice for many reasons, not least in this context because they help developers to avoid common coding pitfalls that might introduce performance bottlenecks or unpredictable behaviour.

There are long-standing and proven mechanisms available to measure properties of software code that are independent of its execution on any particular platform. For example, Halstead’s metrics reflect the implementation or expression of algorithms in different languages to evaluate the software module size, software complexity, and the data flow information. The TBvision component of the LDRA tool suite can calculate these metrics precisely.
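As a rough illustration of how platform-independent such measures are, the sketch below computes the standard Halstead quantities from four token counts. It is not a description of how TBvision works; the function name `halstead` and the example counts are hypothetical, and how operators and operands are tokenised is left as an assumption because that varies between tools.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HalsteadMetrics:
    vocabulary: int    # n = n1 + n2
    length: int        # N = N1 + N2
    volume: float      # V = N * log2(n)
    difficulty: float  # D = (n1 / 2) * (N2 / n2)
    effort: float      # E = D * V


def halstead(n1: int, n2: int, N1: int, N2: int) -> HalsteadMetrics:
    """n1/n2: distinct operators/operands; N1/N2: total operators/operands."""
    if n1 <= 0 or n2 <= 0:
        raise ValueError("need at least one distinct operator and operand")
    vocabulary = n1 + n2
    length = N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    return HalsteadMetrics(vocabulary, length, volume, difficulty,
                           difficulty * volume)


# Hypothetical counts for a small function (not taken from any real code base).
metrics = halstead(n1=4, n2=4, N1=6, N2=6)
print(metrics)
```

Nothing in the calculation depends on the target hardware, which is why these figures can be gathered long before any timing measurement takes place.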

The civil aviation standard DO-178C provides convenient definitions of data coupling and control coupling that are equally applicable elsewhere.

It states that data coupling is “the dependence of a software component on data not exclusively under the control of that software component” and that control coupling is “the manner or degree by which one software component influences the execution of another software component”.

The aim of DCCC analysis is to show that the software modules affect one another only in the ways intended by the software design, ensuring that there are no unplanned, anomalous, or erroneous behaviours. It is important in this context because unplanned, anomalous, or erroneous behaviours will impact timing, as underscored by its inclusion in the MCP-specific document A(M)C 20-193.

The Worst-Case Execution Time (WCET) of a computational task is the maximum length of time that task could take to execute in a specific environment.

Suppose that a real-time system runs on a single-core processor and consists of several tasks, running concurrently. The diagram depicts several relevant properties of one of those real-time tasks.

The WCET is the longest execution time possible for a program, and the BCET (Best Case Execution Time) is the shortest. The pertinent calculations must consider all possible inputs, including specification violations.

Developers must be aware of the Worst-Case Execution Time (WCET) to ensure that the timing window allocated to the task is adequate in all cases.
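A minimal sketch of that check, assuming execution times have already been measured on the target: it reports the observed best and worst cases and the margin left in the allocated window. The names `TimingSummary` and `summarise`, and the sample values, are hypothetical. Note that the largest observed time is only a lower bound on the true WCET, because measurement cannot guarantee that the worst-case path and state were exercised.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class TimingSummary:
    observed_best_us: float   # shortest measured time (approaches BCET from above)
    observed_worst_us: float  # longest measured time (approaches WCET from below)
    margin_us: float          # window minus the observed worst case
    fits_window: bool         # True if no measured run exceeded the window


def summarise(samples_us: Sequence[float], window_us: float) -> TimingSummary:
    if not samples_us:
        raise ValueError("at least one timing sample is required")
    best = min(samples_us)
    worst = max(samples_us)
    margin = window_us - worst
    return TimingSummary(best, worst, margin, margin >= 0)


# Hypothetical measurements in microseconds against a 500 us window.
print(summarise([412.0, 398.5, 455.0, 401.0], window_us=500.0))
```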

The contention that results from threads looking to access Hardware Shared Resources (HSRs) on a multicore processor means that an iterative development process is necessary, one that tunes the code and environmental settings to optimize the balance between different factors.

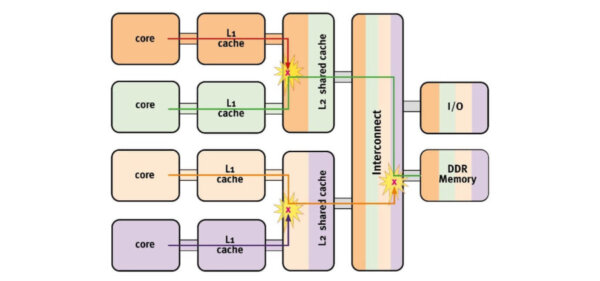

On a single-core processor, resources like memory are dedicated solely to that core. When additional cores are introduced, these resources must be shared among them, leading to delays as cores wait for access.

For instance, the diagram illustrates that when hierarchical memory is shared, interference can occur in multiple areas. These interference channels cause the execution-time distribution to widen, resulting in a broad distribution with a long tail instead of a sharp peak.

While this issue is generally insignificant outside of safety-critical applications, it becomes crucial where both safety and precise timing are essential.

In a critical application, it is often vitally important that the timing window allocated to each task is always big enough. To be sure of that, developers must be able to quantify the Worst-Case Execution Time (WCET). WCET analysis traditionally involves calculation, measurement, or both to achieve that end.

Unlike the single-processor case, the task of finding a schedule of X tasks on Y processor cores such that all tasks meet their deadlines has no known efficient algorithm. That, combined with the challenge of multicore interference channels, demands a rethink of many established practices that rely on the constraints implied by single-core devices.
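To make the scaling problem concrete, here is a deliberately naive sketch that searches every possible assignment of tasks to cores. It rests on several simplifying assumptions that do not come from the text above: tasks are independent and periodic with deadlines equal to their periods, each core is scheduled by earliest-deadline-first (so a core is feasible when its utilisation does not exceed 1), and interference between cores is ignored entirely. The function name `find_partition` and the task values are hypothetical.

```python
from itertools import product
from typing import Optional, Sequence, Tuple

Task = Tuple[float, float]  # (worst-case execution time, period), same unit


def find_partition(tasks: Sequence[Task],
                   cores: int) -> Optional[Tuple[int, ...]]:
    """Return one core index per task, or None if no assignment fits.

    Exhaustive search: up to cores ** len(tasks) candidates are examined.
    """
    utilisations = [wcet / period for wcet, period in tasks]
    for assignment in product(range(cores), repeat=len(tasks)):
        load = [0.0] * cores
        for utilisation, core in zip(utilisations, assignment):
            load[core] += utilisation
        # Small tolerance so floating-point rounding does not reject
        # a core that is exactly fully loaded.
        if all(core_load <= 1.0 + 1e-9 for core_load in load):
            return assignment
    return None


# Hypothetical task set: four tasks on two cores.
tasks = [(6.0, 10.0), (6.0, 10.0), (4.0, 10.0), (4.0, 10.0)]
print(find_partition(tasks, cores=2))
```

The number of candidate assignments grows exponentially with the number of tasks, and that is before interference channels make each task's execution time depend on what its neighbours are doing.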

The LDRA tool suite leverages the TBwcet module to provide a unique, “wrapper” based approach to WCET analysis by measurement. The core of that approach is a tool based on proven technology, and that is available for developers to use without committing to a consultancy package – although that support is available if needed.

Despite this sound foundation, the empirical nature of verification and validation in the multiprocessor environment as compared to single core makes it imperative that project managers can adapt readily to changing requirements and configurations.

As illustrated below, the iterative nature of interference research to minimize the impact of interference channels makes it important to integrate feedback loops into the development process.

Dan Iorga et al. recommend a slow and steady approach to measuring and tuning interference. It is highly likely that such interference research will lead to changes in system or software requirements, and conversely that changes in system functional requirements will drive new interference channels or affect existing ones.

Efficient instrumentation is not the preserve of Multicore Processors. Efficient instrumentation has always been required for requirements-driven structural coverage analysis to minimize its effect on the behaviour of the executable. The LDRA tool suite has always been MCP-ready in this regard.

DO-178, IEC 61508, ISO 26262 and other safety-related standards require structural coverage analysis to be performed. Structural coverage data is collated during requirements-based test procedures. The execution of an “instrumented” copy of the source code records the paths taken during execution, and the resulting output is submitted for Structural Coverage Analysis (SCA).

Coverage analysis at the software level requires instrumentation probes within the code to track execution. That inevitably has an impact on both performance and resources.

It is necessary to minimize that impact for instrumentation to be effective in a multicore system. LDRA’s instrumentation techniques rely on probes inserted into each basic block, meaning that there are as few of them as possible.

With the number of probes minimized, it is also important to minimize the memory required to store the coverage data. Bit-packed storage uses individual bits in a word that correspond to the probe location. That location is pre-calculated, saving execution time.
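The idea of bit-packed storage can be sketched as follows. This is an illustration only, not LDRA's implementation: the class name `CoverageMap`, the 32-bit word size, and the probe numbering are all assumptions. Each probe's word index and bit mask are computed once up front, so recording a hit is a table lookup followed by a single OR.

```python
from typing import List, Tuple

WORD_BITS = 32  # assumed word size


def probe_slot(probe_id: int) -> Tuple[int, int]:
    """Word index and bit mask for a probe."""
    return probe_id // WORD_BITS, 1 << (probe_id % WORD_BITS)


class CoverageMap:
    def __init__(self, probe_count: int) -> None:
        # One bit per probe, rounded up to a whole number of words.
        self.words: List[int] = [0] * ((probe_count + WORD_BITS - 1) // WORD_BITS)
        # Slots are calculated once here, not on every probe execution.
        self.slots: List[Tuple[int, int]] = [
            probe_slot(p) for p in range(probe_count)
        ]

    def hit(self, probe_id: int) -> None:
        index, mask = self.slots[probe_id]
        self.words[index] |= mask

    def covered(self) -> List[int]:
        return [p for p, (index, mask) in enumerate(self.slots)
                if self.words[index] & mask]


coverage = CoverageMap(probe_count=40)  # 40 probes fit in two 32-bit words
for probe in (0, 5, 33):
    coverage.hit(probe)
print(coverage.words, coverage.covered())
```

In an instrumented C program the index and mask would typically be constants emitted at instrumentation time, so the runtime cost of a probe reduces to one read-modify-write of a word.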

In a multicore system, collisions are possible. If more than one core writes to the same location, all but the first attempt will fail. However, embedded systems generally run in a loop. It is therefore highly likely that probes will execute repeatedly, and coverage that is unrecorded due to a collision will be recorded on a subsequent pass. Even failing that, the coverage reported is failsafe, because it can never overstate the amount of code exercised.
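The failsafe property can be illustrated with a small simulation. The collision model here is a simplification adopted for the sketch: within one pass, only the first write to a given word lands and later writes to that word are dropped. The function names and probe numbers are hypothetical, and real hardware behaviour depends on the memory system.

```python
from typing import Iterable, List, Set

WORD_BITS = 32  # assumed word size


def run_pass(words: List[int], contending_probes: Iterable[int]) -> None:
    """Apply one pass in which all listed probes fire at the same instant.

    Assumed collision model: per word, only the first write lands.
    """
    written: Set[int] = set()
    for probe in contending_probes:
        index, bit = divmod(probe, WORD_BITS)
        if index in written:
            continue  # collision: this coverage update is lost
        words[index] |= 1 << bit
        written.add(index)


def recorded(words: List[int]) -> Set[int]:
    """Probe numbers whose bits are set in the coverage words."""
    return {index * WORD_BITS + bit
            for index, word in enumerate(words)
            for bit in range(WORD_BITS)
            if (word >> bit) & 1}


executed = {3, 7, 40}        # probes that genuinely ran
words = [0, 0]

run_pass(words, [3, 7, 40])  # probes 3 and 7 share word 0, so 7 is lost
print(recorded(words), recorded(words) <= executed)

run_pass(words, [7, 3, 40])  # a later pass with different arrival order
print(recorded(words) == executed)
```

After the first pass the recorded set is a strict subset of what executed, so coverage is under-reported but never over-reported. A later pass in which the cores arrive in a different order fills the gap.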

The alternative approach, involving semaphores or mutexes, would have a significant performance overhead. The LDRA tool suite retains the use of such mechanisms for MC/DC analysis, where atomic locking ensures the accuracy and completeness of results.

For interference research, timing rather than code coverage is the critical metric of interest. In this case, similarly efficient instrumentation is used to time each cycle, with negligible impact on the duration of each loop. It is possible to leverage code coverage analysis during the process, which can help with research, but excluding coverage-specific instrumentation will yield more representative timings.
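A minimal sketch of per-cycle timing, assuming a monotonic nanosecond clock is available: only a count, a minimum, and a maximum are kept, so the bookkeeping per cycle stays small. The names `CycleTimer` and `run_cycles` are hypothetical, and on an embedded target the clock would more likely be a hardware cycle counter than Python's `time.perf_counter_ns`.

```python
import time
from typing import Callable, Optional


class CycleTimer:
    """Keeps only count, min and max to limit per-cycle overhead."""

    def __init__(self) -> None:
        self.count = 0
        self.min_ns: Optional[int] = None
        self.max_ns = 0

    def record(self, duration_ns: int) -> None:
        self.count += 1
        if self.min_ns is None or duration_ns < self.min_ns:
            self.min_ns = duration_ns
        if duration_ns > self.max_ns:
            self.max_ns = duration_ns


def run_cycles(cycle: Callable[[], None], iterations: int,
               clock: Callable[[], int] = time.perf_counter_ns) -> CycleTimer:
    """Time each call of `cycle`; the clock is injectable for testing."""
    timer = CycleTimer()
    for _ in range(iterations):
        start = clock()
        cycle()
        timer.record(clock() - start)
    return timer


def control_cycle() -> None:
    # Stand-in for gather data, process, update.
    sum(range(1000))


result = run_cycles(control_cycle, iterations=100)
print(result.count, result.min_ns, result.max_ns)
```

Running the same loop with and without activity on the other cores, and comparing the spread between minimum and maximum, gives a first indication of how much an interference channel widens the distribution.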

Keeping track of any iterative process needs careful project management, and interference analysis is no exception. Under these circumstances, an automated mechanism is vital to keep track of what needs revisiting for renewed verification and validation, and hence to keep the project on schedule and within budget. The TBmanager component of the LDRA tool suite provides just such a mechanism.

DO-178C establishes a need for the analysis of WCET, highlighting it in §6.3 (Software Reviews and Analyses), §6.3.4 (Reviews and Analyses of Source Code), and §11.20 (Software Accomplishment Summary).

For many years, the use of multicore processors in civil aviation has been restricted to the use of a single core only due to concerns over some characteristics of multicore processors. CAST-32A was an advisory document written to be supplemental to DO-178C, and to “[provide] a rationale that explains why these topics are of concern and [propose] objectives to address the concern.”

Standard-specific compliance reporting for DO-178C is supported by the LDRA tool suite.

CAST-32A and its successor documents, AMC 20-193 and AC 20-193, also supported by the TBmanager component of the LDRA tool suite, include detailed guidance on the criteria that are to be satisfied if multicore processors are to be deployed in DO-178C compliant applications.

Real-time critical systems require rapid decision-making processes. They use closed-loop control, which allows only a brief time window to collect data, process it, and update the system. Developers face the challenge of ensuring that execution times never exceed their allotted window, a task that becomes even more difficult with multicore processors.

However, the impact of multicore processors extends beyond this. They affect the entire development lifecycle, making parts of the process iterative and increasing the importance of requirements traceability. Eliminating potential causes of execution time variability and unpredictability becomes crucial, emphasizing the need for accurate data and control coupling analysis (DCCC) and adherence to advanced coding standards. Efficient instrumentation techniques are also vital to minimize their impact on executing software, as they can affect multiple processes simultaneously.

Therefore, maintaining a holistic focus on the implications of multicore processors throughout the development lifecycle will yield better outcomes than overly emphasizing timing analysis and neglecting the broader picture.

